
GitLab’s Docker Autoscaler executor on AWS [Beta]

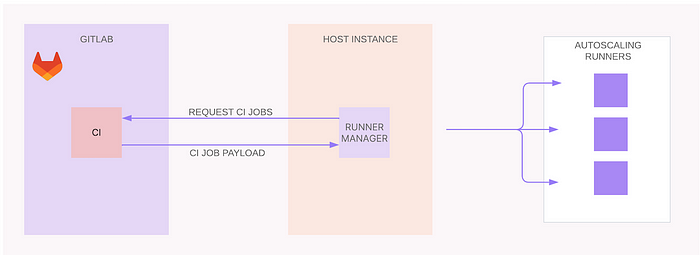

GitLab Runner with autoscaling lets you scale compute resources efficiently for the continuous integration (CI) and continuous deployment (CD) jobs in the GitLab ecosystem. GitLab Runner is the agent that runs the CI/CD jobs defined in your GitLab instance. With GitLab’s Docker Autoscaler executor, you can provision and de-provision compute resources dynamically based on the workload, which improves resource utilization and reduces costs.

The Docker Autoscaler executor is an autoscaling-enabled Docker executor that creates instances on demand to run the jobs handled by the runner manager. It relies on fleeting plugins to do the autoscaling. Fleeting is an abstraction for a group of autoscaled instances, and it uses plugins that support cloud providers such as Google Cloud Platform (GCP), AWS, and Azure.

This executor is currently in beta, so I wanted to take a quick look at where development stands and tried hosting it on AWS. In this article, I will walk you through the steps required to set up an EC2 instance with a custom AMI and run the runners on an Auto Scaling group.

High-Level Internals

At a high level, it works as follows. The Taskscaler is built into the GitLab Runner program and acts as the autoscaling mechanism: it provisions instances dynamically through “fleeting” and distributes tasks among them. Fleeting is an abstraction layer over cloud providers’ instance groups.
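To make this a little more concrete, here is a rough Python model of the kind of decision an autoscaler like this has to make: how many instances are needed for the current job load. It is only a sketch under simple assumptions. The function and parameter names (`instances_needed`, `slots_per_instance`, `spare_slots`, `max_instances`) are made up for illustration and are not the Taskscaler's actual code or configuration keys.

```python
import math


def instances_needed(active_jobs: int,
                     slots_per_instance: int,
                     spare_slots: int,
                     max_instances: int) -> int:
    """Return how many instances a simple autoscaler would ask for.

    Hypothetical model, not GitLab's implementation:
    - each instance can run `slots_per_instance` jobs at once
    - `spare_slots` are kept free so new jobs can start without waiting
    - the result never exceeds `max_instances`
    """
    if slots_per_instance < 1:
        raise ValueError("slots_per_instance must be at least 1")
    wanted_slots = active_jobs + spare_slots
    # Round up: a partially used instance still has to exist.
    wanted_instances = math.ceil(wanted_slots / slots_per_instance)
    return min(wanted_instances, max_instances)


if __name__ == "__main__":
    # Idle pipeline, a moderate load, and a burst that hits the cap.
    for jobs in (0, 7, 50):
        print(jobs, "jobs ->", instances_needed(jobs, 2, 2, 10), "instances")
```

The useful part of the model is the cap and the spare capacity: the group grows with the job queue, keeps a small buffer for new jobs, and stops growing at a fixed ceiling so costs stay bounded.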

Using fleeting plugins built for specific Cloud Service Providers (CSPs) such as AWS, Azure, and GCP, the Taskscaler communicates with the cloud endpoints and provisions resources on the chosen platform. The framework is also extensible: you can write your own fleeting plugin for other cloud environments.
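The plugin idea can be pictured as a small interface that each cloud-specific plugin implements, so the scaling logic never talks to a cloud API directly. The sketch below is written in Python purely for illustration and is not the real fleeting plugin API. Every name in it (`InstanceGroupPlugin`, `InMemoryPlugin`, `scale_to`) is hypothetical, and the in-memory class stands in for a real provider.

```python
from abc import ABC, abstractmethod
from typing import List


class InstanceGroupPlugin(ABC):
    """Hypothetical provider-agnostic view of a cloud instance group."""

    @abstractmethod
    def list_instances(self) -> List[str]:
        """Return the IDs of the instances currently in the group."""

    @abstractmethod
    def increase(self, count: int) -> None:
        """Ask the provider for `count` additional instances."""

    @abstractmethod
    def decrease(self, instance_ids: List[str]) -> None:
        """Ask the provider to remove the given instances."""


class InMemoryPlugin(InstanceGroupPlugin):
    """Stand-in for a cloud provider; keeps instance IDs in a list."""

    def __init__(self) -> None:
        self._instances: List[str] = []
        self._next_id = 1

    def list_instances(self) -> List[str]:
        return list(self._instances)

    def increase(self, count: int) -> None:
        for _ in range(count):
            self._instances.append(f"i-{self._next_id}")
            self._next_id += 1

    def decrease(self, instance_ids: List[str]) -> None:
        self._instances = [i for i in self._instances if i not in instance_ids]


def scale_to(plugin: InstanceGroupPlugin, target: int) -> None:
    """Grow or shrink the group until it holds `target` instances."""
    current = plugin.list_instances()
    if len(current) < target:
        plugin.increase(target - len(current))
    elif len(current) > target:
        # Remove the most recently created instances first (arbitrary choice).
        plugin.decrease(current[target:])


if __name__ == "__main__":
    group = InMemoryPlugin()
    scale_to(group, 3)
    scale_to(group, 1)
    print(group.list_instances())
```

Because `scale_to` only depends on the abstract class, supporting another cloud in this model means writing one more subclass, which mirrors the extensibility described above.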